Paper on regionalization of compositional data

Posted December 07, 2025

We’ve got a new paper out that grew partly out of our ongoing interest in bioacoustics, machine learning, and how to actually do inference with the probability vectors that classifiers produce.

A lot of modern ecological data now come from machine-learning classifiers. Instead of saying “this sound is drumming,” the model says something like “60% drumming, 30% whinny, 10% pik.” Those probability vectors are compositional data, and they’re surprisingly hard to analyze correctly—especially in space.

The paper develops a new Bayesian spatial model that works directly with these probability vectors, without forcing awkward transformations or zero “fixes.” The model:

- Works naturally with probabilities that sum to 1

- Allows zero values

- Allows positive correlations among components

- Propagates uncertainty from the classifier into the spatial model
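To make the zero problem concrete, here is a minimal sketch of what happens when a standard log-ratio transform meets a zero. It uses the centered log-ratio (clr), a common tool in compositional data analysis; it is not code from the paper, and the example vectors are made up for illustration.

```python
import numpy as np

def clr(p):
    """Centered log-ratio transform: log(p) minus the mean of log(p)."""
    logp = np.log(p)
    return logp - logp.mean()

# Hypothetical classifier outputs (illustrative values only).
p_ok = np.array([0.6, 0.3, 0.1])    # strictly positive composition
p_zero = np.array([0.7, 0.3, 0.0])  # one component is exactly zero

clr_ok = clr(p_ok)

# log(0) is -inf, so the transform produces non-finite values.
# errstate only silences the NumPy warnings; it does not fix the result.
with np.errstate(divide="ignore", invalid="ignore"):
    clr_zero = clr(p_zero)

print("clr, no zeros:  ", clr_ok)
print("clr, with zero: ", clr_zero)
print("all finite?", np.all(np.isfinite(clr_ok)), np.all(np.isfinite(clr_zero)))
```

The usual workaround is to replace zeros with a small positive value before transforming, which is the kind of zero "fix" the model is designed to avoid.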

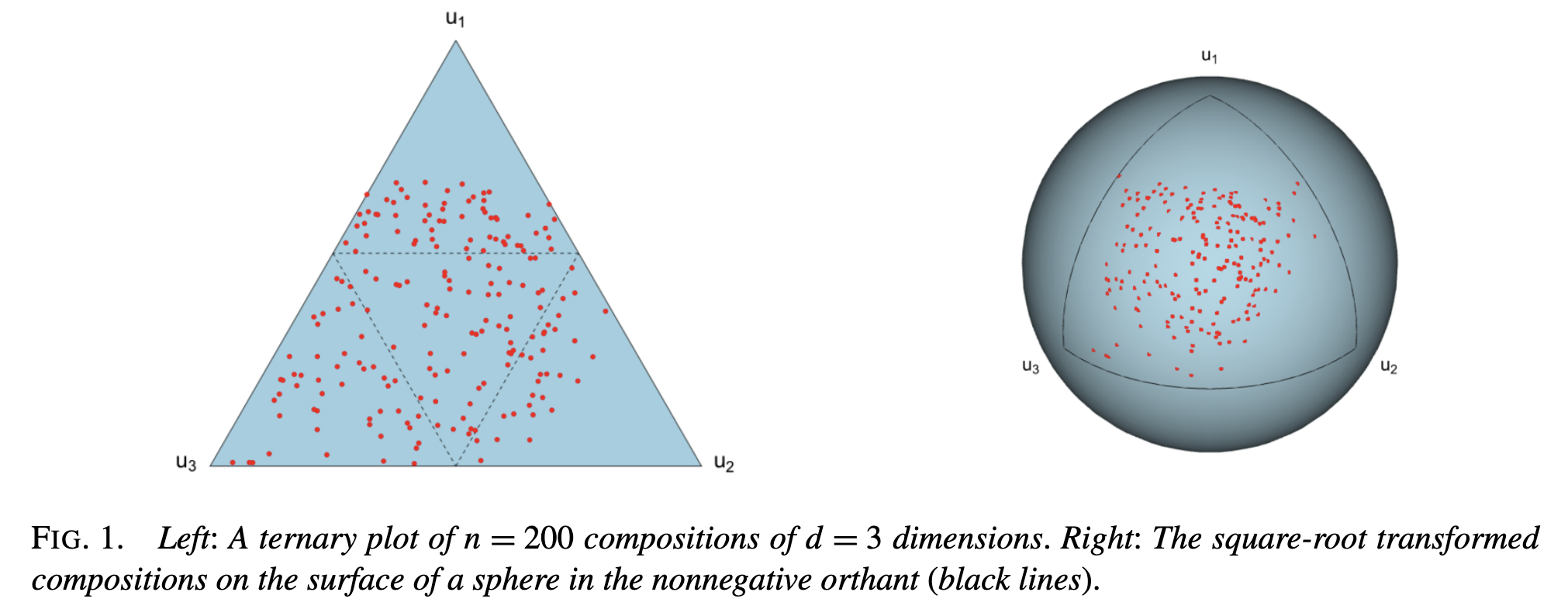

Under the hood, it uses a hyperspherical representation of compositions and a spatial regression framework built on that geometry.

Here’s the basic geometric idea behind the transformation:

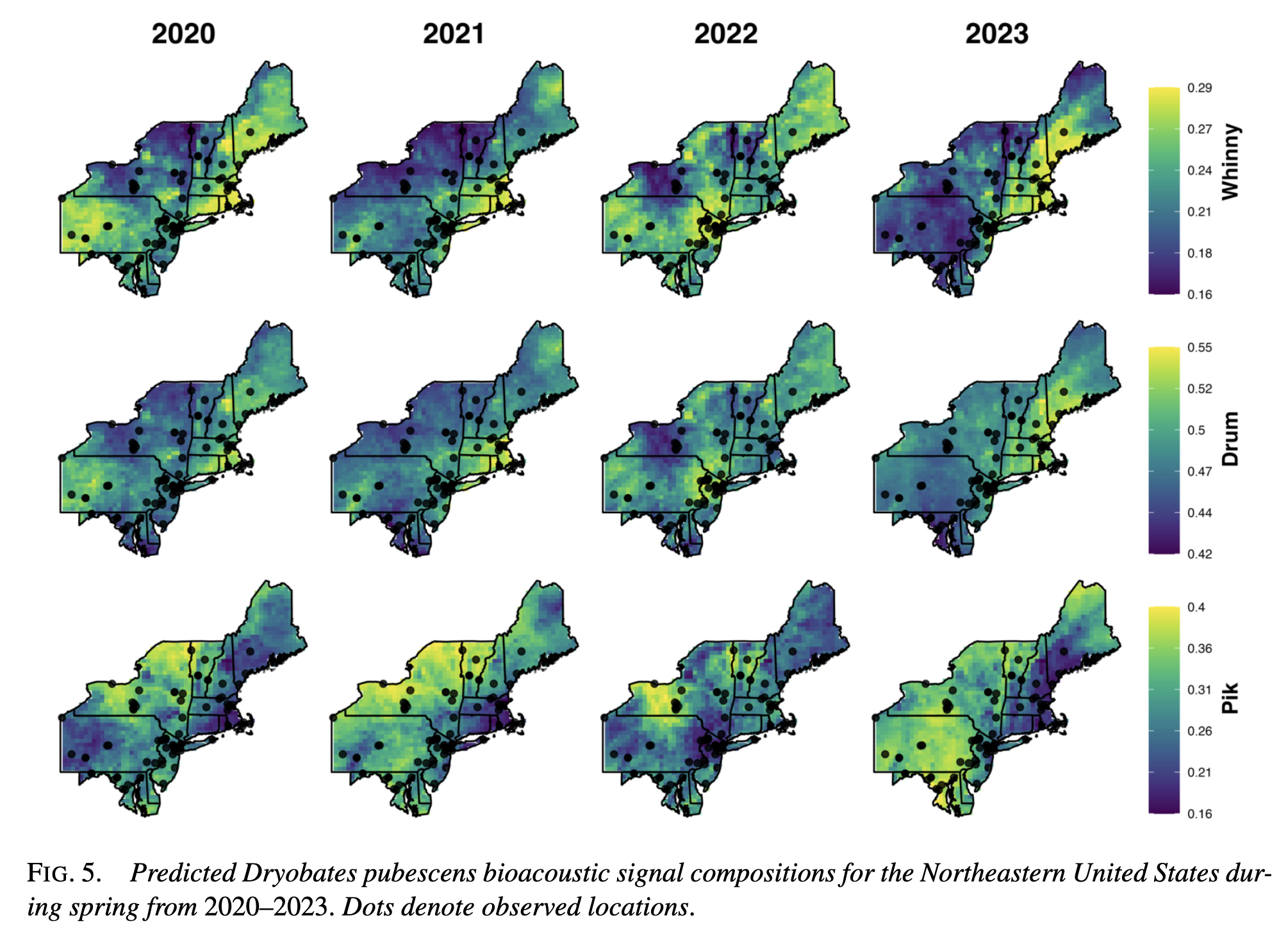
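As a rough sketch of that idea, one standard way to put a composition on a sphere is the square-root transform: if the parts sum to 1, their square roots have unit length, so the composition becomes a point on the positive part of the unit sphere, and that point can be described by angles. The code below assumes this construction for a three-part composition. The paper's exact parameterization may differ, and the function names here are hypothetical.

```python
import numpy as np

def to_sphere(p):
    """Square-root transform: maps a composition to a point on the unit sphere."""
    return np.sqrt(np.asarray(p, dtype=float))

def to_angles(x):
    """Angles for a point x on the unit sphere in 3 dimensions (assumed convention)."""
    theta1 = np.arccos(np.clip(x[0], 0.0, 1.0))
    theta2 = np.arctan2(x[2], x[1])
    return np.array([theta1, theta2])

def from_angles(theta):
    """Invert to_angles, then square to get back to the composition."""
    t1, t2 = theta
    x = np.array([
        np.cos(t1),
        np.sin(t1) * np.cos(t2),
        np.sin(t1) * np.sin(t2),
    ])
    return x ** 2

# Illustrative composition: drumming, whinny, pik.
p = np.array([0.6, 0.3, 0.1])
x = to_sphere(p)
theta = to_angles(x)
p_back = from_angles(theta)

# A zero component needs no special handling: sqrt(0) is just 0.
p_zero = np.array([0.7, 0.3, 0.0])
theta_zero = to_angles(to_sphere(p_zero))
p_zero_back = from_angles(theta_zero)

print("squared length on sphere:", np.sum(x ** 2))
print("angles:", theta)
print("round trip:", p_back)
print("round trip with a zero:", p_zero_back)
```

The appeal of this geometry is that nothing blows up at zero, unlike log-based transforms, and the sum-to-1 constraint is satisfied automatically when mapping back from angles.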

We applied the model to downy woodpecker (*Dryobates pubescens*) recordings from the northeastern U.S., classified with a convolutional neural network into three vocalization types:

- Drumming

- Whinnying

- “Pik” calls

Using spring temperature and precipitation as covariates, the model predicts the spatial distribution of vocal behavior probabilities for each year from 2020 to 2023.

A few broad patterns popped out:

- Drumming is most common overall and tends to be higher in cooler, wetter regions

- Whinnying increases with higher precipitation, especially in warm areas

- Pik calls tend to increase in drier regions, partly as a compositional offset
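The "compositional offset" in that last point is worth a toy example. Because the three components must sum to 1, one share can rise simply because another falls. The numbers below are invented for illustration and are not results from the paper.

```python
import numpy as np

def close(levels):
    """Rescale non-negative levels so they sum to 1 (the 'closure' operation)."""
    levels = np.asarray(levels, dtype=float)
    return levels / levels.sum()

# Hypothetical unnormalized activity levels: drumming, whinny, pik.
# Only drumming differs between the two regions; pik is unchanged.
wet = close([60.0, 30.0, 10.0])
dry = close([30.0, 30.0, 10.0])

print("wet region shares:", wet)
print("dry region shares:", dry)
# The pik share rises from 0.10 to about 0.14 even though the pik level is identical.
```

This is why shifts in a single component of a composition need to be read alongside the others rather than in isolation.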

These are predictions of classification probabilities, not direct behavioral observations—but they give a spatially coherent picture of how vocal activity tracks climate.

From my perspective, the main contribution is that it gives us a principled way to connect:

machine learning → uncertainty → spatial ecological inference.

That pipeline is becoming unavoidable in sensor-based ecology, and this work finally treats the classifier output as proper statistical data rather than something to be thresholded and forgotten.

If you’re into spatial stats, compositional data, bioacoustics, or just how AI outputs get used downstream, you might find it interesting.